Interactive Multisensory Object Perception for Embodied Agents (Symposium)

Symposium at the AAAI Spring Symposium Series in 2017 at Stanford University.

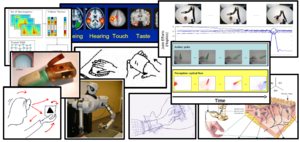

For a robot to perceive object properties with multiple sensory modalities, it needs to interact with the object through action. This interaction requires that the agent be embodied (i.e., the robot interacts with the environment through a physical body within that environment). A major challenge is getting a robot to interact with the scene quickly and efficiently. Furthermore, learning to perceive and reason about objects in terms of multiple sensory modalities remains a longstanding challenge in robotics. Multiple lines of evidence from psychology and cognitive science have demonstrated that humans rely on multiple senses (e.g., audio, haptic, and tactile) in a broad variety of contexts, ranging from language learning to learning manipulation skills. Nevertheless, most object representations used by robots today rely solely on visual input (e.g., a 3D object model) and thus cannot be used to learn or reason about non-visual object properties (e.g., weight or texture).

The major question we want to address is: how do we collect large datasets from robots exploring the world with multi-sensory inputs, and what algorithms can we use to learn and act with this data? For instance, at several major universities, there are robots that can operate autonomously (e.g., navigate throughout a building or manipulate objects) for long periods of time. Such robots could potentially generate large amounts of multi-modal sensory data, coupled with the robot's actions. While the community has focused on how to deal with visual information (e.g., deep learning for visual features from large-scale databases), there have been far fewer explorations of how to utilize and learn from the very different scales of data collected from very different sensors. Specific challenges include the fact that different sensors produce data at different sampling rates and different resolutions. Furthermore, data produced by a robot acting in the world is typically not independent and identically distributed (a common assumption of machine learning algorithms), as the current data point often depends on previous actions.

This symposium is co-organized with Vivian Chu, Jivko Sinapov, Sonia Chernova and Andrea Thomaz.

Details

- 27 March 2017 • 9:00 - 29 March 2017 • 13:00

- Stanford University

- Autonomous Motion