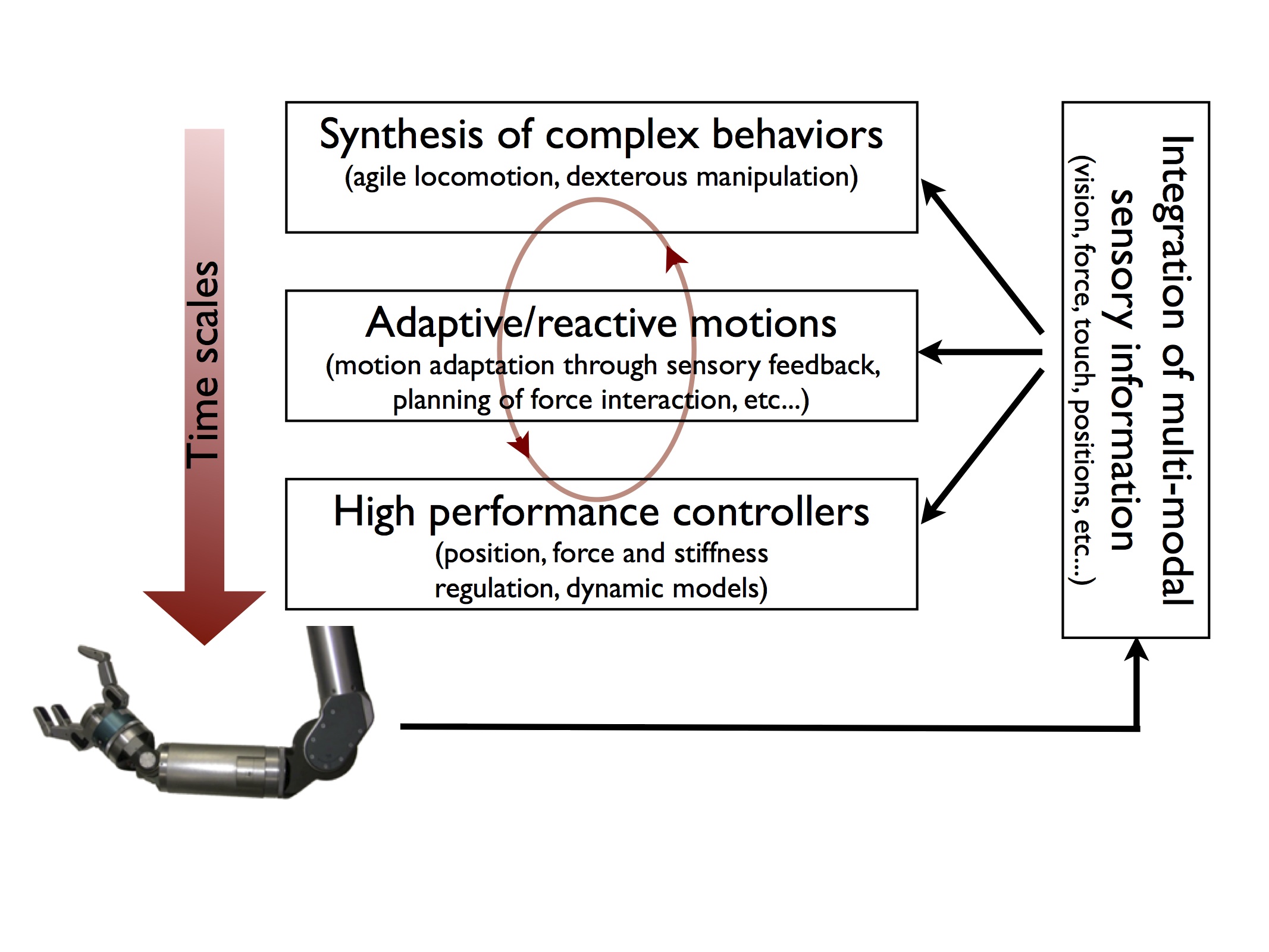

Overview of the principal research directions of this research topic and their interactions

In this research area, we focus on developing control and planning algorithms for generating movements in autonomous robots, especially robots with hands and legs. We develop algorithms that allow machines to move and interact with their environment in a non-trivial manner. Robots should be able to locomote on difficult terrain and manipulate objects to perform complicated tasks, and in many cases they should be able to do both at the same time. The generation and control of coordinated movements for a robot that performs several tasks simultaneously is a complex problem that involves very different research areas. First, one needs to develop adequate controllers that ensure the robot performs according to the desired plan. Then, motion generation algorithms are required to create desired motions or contact interaction policies, ideally in an optimal way. Finally, complex behaviors such as locomotion on rough, unknown terrain or dexterous manipulation must be synthesized to coordinate these lower-level algorithms. Furthermore, all these algorithms are expected to work together, and they need to integrate the information available from the sensors to improve performance and reactivity in unpredictable environments. With this goal in mind, our research follows several complementary directions, from high-performance force control to optimal control and planning and inverse reinforcement learning.

High performance control. Performing challenging tasks requires very good controllers to execute the desired plans. While often underestimated, carefully designed controllers can significantly increase performance by optimally exploiting the possibilities offered by modern hardware (e.g. torque actuation, high control bandwidth, multi-modal sensing). In general, we are interested in controlling very different physical quantities (positions, velocities, forces or impedance) of various robot parts (joints, end-effectors or the entire robot's motion) to achieve several concurrent objectives. For example, while drilling a hole in a wall, the robot must control the drill tip position for precise drilling and the interaction forces to bore into the wall, while at the same time controlling its center of mass to keep its balance in the face of the disturbances created by the manipulation task. To develop high-performance controllers, it is necessary to understand the physical processes underlying the actuation and the dynamics associated with the tasks the robot tries to achieve. To this end, we often use (complex or simplified) models based on rigid-body dynamics, augmented with hardware-specific modeling (e.g. hydraulic dynamics for hydraulically actuated robots). The resulting controllers need to be robust to uncertainties in the estimated model, computationally simple enough to run in fast control loops, and able to guarantee some form of optimality and robustness to disturbances.
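
One common way to write down such a multi-objective control problem, given here only as an illustrative sketch and not necessarily the formulation used in this research, is a weighted least-squares problem constrained by the rigid-body dynamics. All symbols are assumptions introduced for illustration: $q$ denotes the generalized coordinates, $M$ the inertia matrix, $h$ the Coriolis, centrifugal and gravity terms, $S$ the selection matrix of actuated joints, $\tau$ the joint torques, $J_c$ the contact Jacobian and $\lambda$ the contact forces. Each motion task $i$ (for instance the drill tip position or the center of mass) has a Jacobian $J_i$, a desired task acceleration $a_i^{\mathrm{des}}$ and a weight $w_i$.

$$
\min_{\ddot{q},\,\tau,\,\lambda} \; \sum_i w_i \left\| J_i \ddot{q} + \dot{J}_i \dot{q} - a_i^{\mathrm{des}} \right\|^2
\quad \text{subject to} \quad
M(q)\,\ddot{q} + h(q,\dot{q}) = S^{\top}\tau + J_c^{\top}\lambda
$$

The weights express the relative importance of the concurrent objectives. Force objectives and actuator limits would enter as additional cost terms or constraints.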

Adaptive/reactive motions. Traditional motion planning generally computes a motion plan from a model of the environment and then lets the controller execute it. If an unexpected event happens, a new plan must be computed from an updated model of the world, which is potentially time-consuming. In close connection with the perception for action research conducted in the department, we are researching intermediate motion representations that sit between the motion plan and the controller and can integrate sensory feedback while the movement is being generated. When unexpected events occur, the motion can then be adapted to ensure successful execution of the task. Ideally, we would like algorithms that can integrate task-relevant sensory information at every level of planning and control. The approaches we are investigating range from model predictive control (where explicit models are required) to dynamic movement primitives with sensory feedback (where the models are implicit).
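
To make the idea of a movement primitive with sensory feedback concrete, the following is a minimal sketch of a one-dimensional dynamic movement primitive whose acceleration receives an extra coupling term computed from a sensor reading. It is an illustration under stated assumptions, not the implementation used in this research: the learned forcing term is omitted, the gains are typical textbook values, and `rollout_dmp` and `wall_feedback` are hypothetical names. The "sensor" is a virtual wall that pushes back in proportion to penetration.

```python
def rollout_dmp(y0, goal, feedback=None, tau=1.0, alpha=25.0, beta=6.25,
                dt=0.001, duration=2.0):
    """Integrate a 1-D DMP transformation system with explicit Euler steps.

    tau * dz/dt = alpha * (beta * (goal - y) - z) + coupling
    tau * dy/dt = z
    The learned forcing term is left out (assumption, for brevity).
    """
    y, z = y0, 0.0
    trajectory = [y]
    for _ in range(int(round(duration / dt))):
        # Sensory feedback enters as an additive coupling term.
        coupling = feedback(y) if feedback is not None else 0.0
        dz = (alpha * (beta * (goal - y) - z) + coupling) / tau
        dy = z / tau
        z += dz * dt
        y += dy * dt
        trajectory.append(y)
    return trajectory


def wall_feedback(y, wall=0.5, gain=1000.0):
    """Hypothetical sensor model: push back once y passes a virtual wall."""
    return -gain * max(0.0, y - wall)


nominal = rollout_dmp(0.0, 1.0)                          # no feedback
adapted = rollout_dmp(0.0, 1.0, feedback=wall_feedback)  # with feedback
```

Without feedback the primitive converges to the goal. With the coupling term it settles just past the virtual wall instead, without any replanning: the motion representation itself absorbs the unexpected contact.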

Multi-time-scale integration. Planning and control are often thought of as separate problems. We believe that a tight coupling between planning and control algorithms is necessary to achieve complex tasks. The design of a controller depends on the requirements of the planned policies and, conversely, the capabilities of the controller define what can be planned. For example, a controller that can regulate ground reaction forces during locomotion allows a planner to exploit these forces to create better motions. Such integration becomes especially relevant when generating very dynamic movements such as jumping to grasp an out-of-reach object. We are developing methods that treat motion generation as an integrated problem involving several algorithms that operate at different time scales. Typically, at one end of the scale we find fast control loops operating at the millisecond level or below, while at the other end we find planners for symbolic reasoning operating at the level of seconds or minutes. Designing algorithms for different time scales simplifies their design, since each can be developed separately. However, we believe that requiring some coupling between them will allow complex behavior (i.e. behavior more complex than the sum of the individual modules) to emerge when the robot interacts with the external world.
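
The separation of time scales described above can be sketched as two nested loops. The example below is a toy illustration under stated assumptions, not the architecture used in this research: a hypothetical "planner" simply picks the next waypoint once per second, while a PD controller tracks the current target every millisecond on a unit point mass. The function name `simulate` and all gains, rates and waypoints are invented for illustration.

```python
def simulate(duration=4.0, control_dt=0.001, plan_period=1.0,
             waypoints=(0.2, 0.5, 1.0), kp=400.0, kd=40.0):
    """Run a slow planning loop and a fast control loop on a unit point mass."""
    steps_per_plan = int(round(plan_period / control_dt))
    pos, vel, target = 0.0, 0.0, 0.0
    plan_updates = 0
    for step in range(int(round(duration / control_dt))):
        if step % steps_per_plan == 0:
            # Slow loop (seconds): stand-in planner selects the next waypoint
            # and holds the last one once the list is exhausted.
            target = waypoints[min(plan_updates, len(waypoints) - 1)]
            plan_updates += 1
        # Fast loop (milliseconds): PD control toward the current target.
        force = kp * (target - pos) - kd * vel
        vel += force * control_dt   # unit mass, semi-implicit Euler step
        pos += vel * control_dt
    return pos, plan_updates


final_pos, n_plans = simulate()
```

The two loops are coupled only through `target`, which is what lets them be designed separately. A tighter coupling, for example a planner that reads back the tracking error, would be added through the same interface.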

Sensor information integration. We expect a dramatic increase in the number of sensors available on modern robotic platforms. However, we hardly know how to fully exploit the information produced by the sensors on our current hardware. Motion planning is usually done with an a priori model of the environment, without online integration of sensor information. Control systems, on the other hand, typically use sensor information that relates directly to the physical quantities to be controlled (e.g. collocated position or force sensors). Very few algorithms can integrate complex sensor modalities coming from active perception algorithms or exploit the richness of the information available in these sensors to make sensible control or planning decisions. One of our research goals is to use all the available sensory information more systematically to improve motion generation and control. This research direction is pursued in close collaboration with the perception for action research conducted in the department.

Complete systems for complex behaviors. It is not sufficient to develop theoretically sound algorithms; it is also necessary to demonstrate that such algorithms interact appropriately when integrated in a complete system. We are therefore developing complex real-life scenarios to test the algorithms. First, we are developing a complete system for legged locomotion over rough terrain (e.g. a disaster relief scenario). Second, we are testing our algorithms within a complete system for bi-manual manipulation available in the department. Robotic experiments are used not only to validate our algorithms but also to help us understand what the real challenges are that our future algorithms have yet to solve. These developments go beyond mere motion planning and control and involve a tight integration of all the research topics developed in the department.