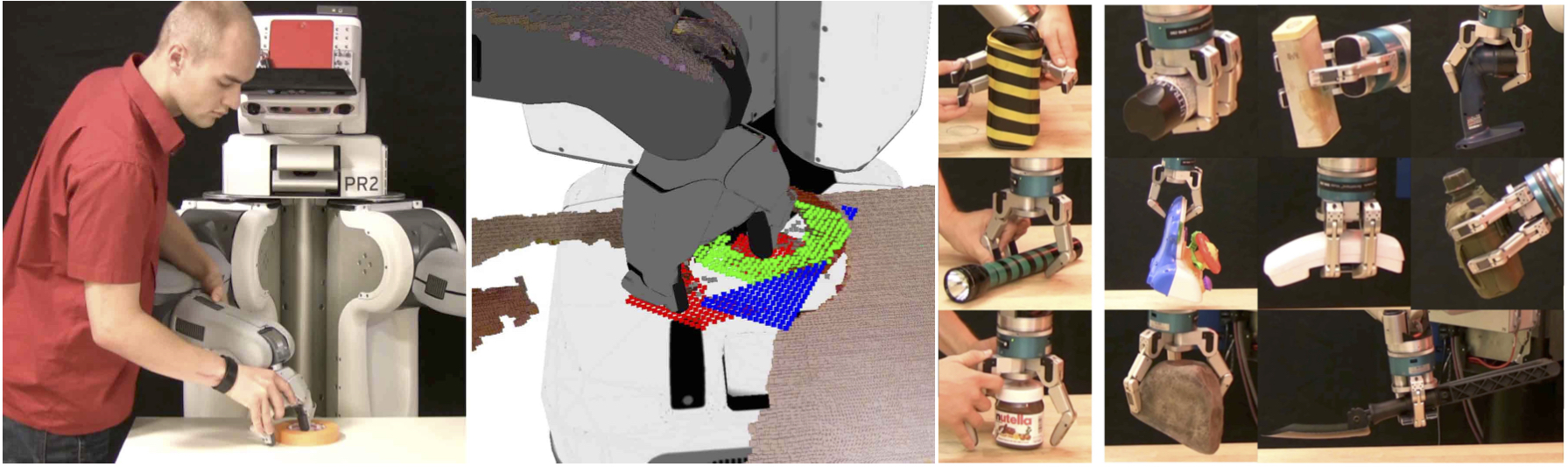

A user demonstrates a grasp to the robot (left). A representation of the object shape is stored together with the gripper configuration in a database (second from left). Novel objects, unknown to the robot, are grasped using a set of user-demonstrated grasp templates (right).

Autonomous robotic grasping is one of the prerequisites for personal robots to become useful assistants in households. Although grasping is effortless for humans, it remains a very challenging task for robots. The key problem of robotic grasping is to automatically choose an appropriate grasp configuration for an object as perceived by the robot's sensors. An algorithm that autonomously provides promising grasp hypotheses has to generalize over the large variations in size and geometry of everyday objects (cf. figure above).

Luckily, objects that differ in overall shape are still likely to have similarly formed sub-parts that can be grasped with a common grasp strategy. For example, the shovel and the flashlight in the figure above can both be grasped at their cylindrical middle part, even though the two objects are not similar as a whole. Exploiting this property, our algorithm lets a user build a library of demonstrated template grasps, each containing a local shape representation of the object together with the gripper configuration that led to a successful grasp execution. When presented with an unknown object, the robot searches for sub-parts that match the stored templates. The gripper configuration associated with the best-matching shape template is then executed on the novel object.
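To make the template-matching step concrete, here is a minimal Python sketch. It assumes, purely for illustration, that each local shape is a small flattened height-map patch of fixed size, that candidate sub-parts of the novel object have already been extracted as patches of the same size, and that similarity is the sum of squared differences. The names `GraspTemplate`, `patch_distance` and `best_grasp`, and the string placeholders standing in for gripper configurations, are hypothetical; the method described above may use a different shape representation and distance measure.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Hypothetical representation: a local shape is a flattened height-map patch.
Patch = Sequence[float]


@dataclass
class GraspTemplate:
    shape: Patch          # local shape around the demonstrated grasp
    gripper_config: str   # placeholder for the demonstrated gripper configuration


def patch_distance(a: Patch, b: Patch) -> float:
    """Sum of squared differences between two equally sized patches."""
    if len(a) != len(b):
        raise ValueError("patches must have the same size")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def best_grasp(library: List[GraspTemplate],
               candidates: List[Tuple[str, Patch]]) -> Tuple[str, str, float]:
    """Compare every candidate sub-part with every template and return
    (candidate_id, gripper_config, distance) of the closest pair."""
    best = None
    for cand_id, cand_patch in candidates:
        for template in library:
            d = patch_distance(template.shape, cand_patch)
            if best is None or d < best[2]:
                best = (cand_id, template.gripper_config, d)
    if best is None:
        raise ValueError("need at least one template and one candidate")
    return best


if __name__ == "__main__":
    library = [
        GraspTemplate([1.0, 1.0, 1.0, 1.0], "cylinder_wrap"),  # e.g. a handle
        GraspTemplate([0.0, 2.0, 0.0, 2.0], "edge_pinch"),     # e.g. a ridge
    ]
    candidates = [
        ("handle", [1.0, 1.1, 0.9, 1.0]),
        ("blade", [0.0, 1.8, 0.1, 2.0]),
    ]
    print(best_grasp(library, candidates))
```

Here the cylindrical "handle" patch matches the `cylinder_wrap` template most closely, so that demonstrated gripper configuration would be executed at the handle. An exhaustive double loop keeps the sketch simple; a real system would likely index the library for faster lookup.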

Our method achieves a high success rate on two very different robots. However, with only positive examples in the library, the robot is not always able to pick up an object. We therefore also allow storing negative examples whenever a grasp hypothesis does not lead to a successful execution. Our method then trades off two objectives: on the one hand, the gripper should be placed at object parts that are similar to good user demonstrations; on the other hand, the robot should avoid sub-parts that look like previously failed attempts. Indeed, when the robot failed to grasp an object, it tried again and eventually succeeded because it learned from its failures.
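One simple way to express this trade-off is a cost that rewards similarity to successful demonstrations and penalizes closeness to failed attempts. The Python sketch below is an assumption for illustration, not the formulation used by the method: it takes the squared distance to the nearest positive example and adds a hinge penalty when a candidate lies within a hypothetical `margin` of a failed example, scaled by a hypothetical `weight`. Shapes are again assumed to be flattened height-map patches, and gripper configurations are omitted for brevity.

```python
import math
from typing import List, Sequence, Tuple

Patch = Sequence[float]  # hypothetical: flattened local height-map patch


def sq_dist(a: Patch, b: Patch) -> float:
    """Sum of squared differences between two equally sized patches."""
    if len(a) != len(b):
        raise ValueError("patches must have the same size")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(patch: Patch, examples: List[Patch]) -> float:
    """Distance to the closest stored example (infinity if none are stored)."""
    return min((sq_dist(patch, e) for e in examples), default=math.inf)


def grasp_cost(patch: Patch, positives: List[Patch], negatives: List[Patch],
               weight: float = 1.0, margin: float = 1.0) -> float:
    """Lower is better: stay close to successes, keep away from failures.

    Assumed form: d_pos + weight * max(0, margin - d_neg), so failed
    examples only add a penalty when the candidate lies within `margin`.
    """
    d_pos = nearest(patch, positives)
    d_neg = nearest(patch, negatives)
    return d_pos + weight * max(0.0, margin - d_neg)


def select_grasp(candidates: List[Tuple[str, Patch]], positives: List[Patch],
                 negatives: List[Patch], weight: float = 1.0,
                 margin: float = 1.0) -> Tuple[str, Patch]:
    """Return the (candidate_id, patch) pair with the lowest cost."""
    return min(candidates, key=lambda c: grasp_cost(c[1], positives, negatives,
                                                    weight, margin))


if __name__ == "__main__":
    positives = [[1.0, 1.0, 1.0, 1.0]]   # shape around a successful grasp
    negatives = [[1.0, 1.0, 1.0, 0.0]]   # shape around a failed attempt
    candidates = [("A", [1.0, 1.0, 1.0, 0.9]), ("B", [1.2, 1.2, 1.2, 1.2])]
    print(select_grasp(candidates, positives, [])[0])         # positives only
    print(select_grasp(candidates, positives, negatives)[0])  # with failures
```

With positives only, candidate A is chosen because it is closest to the successful demonstration. Once the failed attempt is stored, A also lies close to that failure and is penalized, so the less similar but safer candidate B is selected instead. The hinge form is one choice among many; it leaves candidates far from every failure unaffected.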