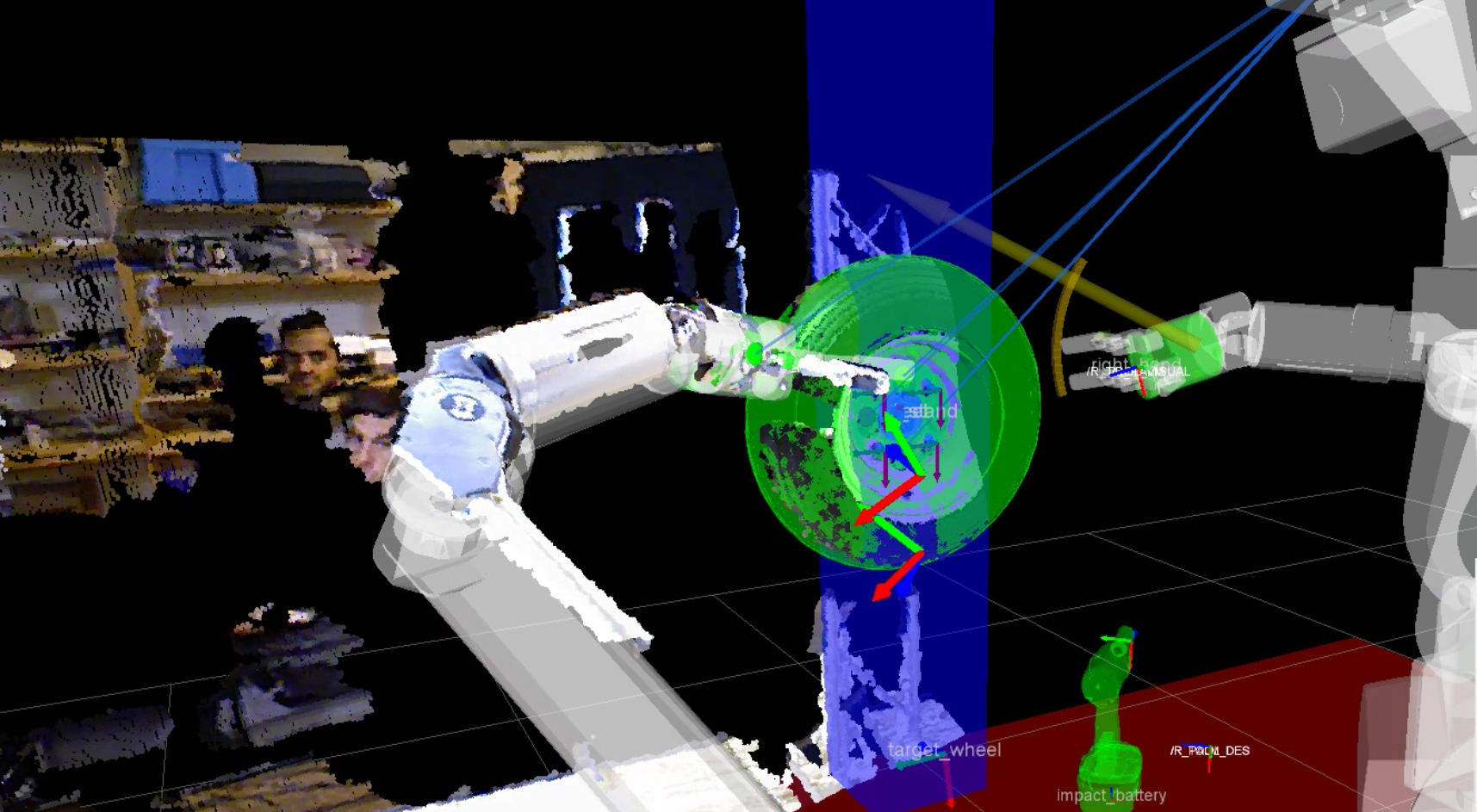

A dual-arm robotic platform performing a tire change. The relevant objects for this scenario are detected and tracked using a head-mounted Kinect camera. The objects involved are the tire and the impact wrench (in green), the tire stand (in blue), and the hands and arms of the robot.

Hand-eye coordination is crucial for capable manipulation of objects. It requires knowing the locations of the manipulator and of the objects, which have to be inferred from sensory data. In this project we work with range sensors, which are widespread in robotics and provide dense depth images.

The objective is to continuously infer the 6-DoF poses of all objects involved at the frame rate of the incoming depth images. This includes objects the robot is interacting with, as well as the links of its own manipulators. This problem poses a number of challenges that are difficult to address with standard Bayesian filtering methods:

- The measurement, i.e. the dense depth image, is high-dimensional. We therefore investigate how approximate inference can be performed efficiently, e.g. by imposing a factorization over the pixels.

- Measurements come from multiple modalities, at different rates and with a relative delay. We propose filtering methods that make full use of all available measurements despite these differences. [ ]

- The measurement process is very noisy. We are working on making Kalman filtering methods more robust. [ ] [ ]

- Occlusions of objects are pervasive in manipulation. We developed a model of depth image generation that takes occlusion explicitly into account, which proved to greatly improve robustness. [ ]

- The state is high-dimensional if many objects are involved or if the robot has many joints. We have therefore worked on an extension of the particle filter that, for certain dynamical systems, scales better with the dimensionality of the state space. [ ]
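To make the pixel factorization and the explicit treatment of occlusion more concrete, the following Python sketch scores a measured depth image against the depth image predicted for a hypothesised pose. It is only an illustration under simplifying assumptions, not the model from the cited work: pixels are treated as independent, a visible pixel carries Gaussian noise, an occluded pixel is uniformly distributed in front of the object, and the occlusion probability is a fixed constant. All names and parameter values (`sigma`, `p_occluded`, the numerical floor) are hypothetical.

```python
import math


def pixel_log_likelihood(measured, predicted, sigma=0.01, p_occluded=0.1):
    """Log-likelihood of one measured depth given the predicted depth (metres).

    Assumed mixture (illustrative only):
      visible  -> Gaussian noise around the predicted depth
      occluded -> occluder anywhere in front of the object, uniform on [0, predicted]
    """
    z = (measured - predicted) / sigma
    visible = math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))
    occluded = 1.0 / predicted if 0.0 <= measured <= predicted else 0.0
    density = (1.0 - p_occluded) * visible + p_occluded * occluded
    # Numerical floor (an assumption) so that the logarithm stays finite.
    return math.log(max(density, 1e-300))


def image_log_likelihood(measured_image, predicted_image, **kwargs):
    """Sum of per-pixel log-likelihoods, i.e. a likelihood that factorizes over pixels.

    Both images are flat lists of depths. A predicted value of None means the
    object does not cover that pixel, so the pixel is skipped.
    """
    total = 0.0
    for measured, predicted in zip(measured_image, predicted_image):
        if predicted is None:
            continue
        total += pixel_log_likelihood(measured, predicted, **kwargs)
    return total
```

With such a model, a pixel that measures something closer than the predicted object surface is explained as an occlusion rather than as evidence against the pose hypothesis. A correct hypothesis is therefore not discarded just because a hand or another object partly covers the tracked object.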

Our algorithms are released as open source code and tested on data sets annotated with ground truth. They also provide a basis for research on robotic manipulation, and we have shown their integration into full robotic systems.

Simultaneous Object and Manipulator Tracking

We show our real-time perception methods integrated with reactive motion generation [ ] on a real robotic platform performing manipulation tasks.

Robust Probabilistic Robot Arm Tracking

We propose probabilistic articulated real-time tracking for robot manipulation [ ].

This video visualizes how well the pose and joint configuration of a robot arm are estimated from different sensory inputs. The estimate is perfect when the colored overlay matches the arm in the image.

Robust Probabilistic Object Tracking

We developed a set of methods that are robust to strong, long-term occlusions and to noisy, high-dimensional measurements. The following video shows our particle-filter-based method for robust visual object tracking under strong occlusions [ ].
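For readers unfamiliar with particle filters, the sketch below shows one step of a generic bootstrap particle filter in Python: predict, weight by the measurement likelihood, and resample. It is a textbook version, not the tracker described above. It assumes a scalar state with random-walk dynamics instead of a 6-DoF pose, and the function names and the `process_sigma` parameter are hypothetical.

```python
import math
import random


def systematic_resample(particles, weights, rng):
    """Draw len(particles) samples in proportion to the normalised weights."""
    n = len(particles)
    step = 1.0 / n
    offset = rng.random() * step
    resampled = []
    index = 0
    cumulative = weights[0]
    for k in range(n):
        target = offset + k * step
        # Advance to the particle whose cumulative weight covers the target.
        while target > cumulative and index < n - 1:
            index += 1
            cumulative += weights[index]
        resampled.append(particles[index])
    return resampled


def particle_filter_step(particles, log_likelihood, process_sigma, rng):
    """One predict / weight / resample cycle of a bootstrap particle filter."""
    # Predict: random-walk dynamics (an assumption made for this sketch).
    predicted = [x + rng.gauss(0.0, process_sigma) for x in particles]
    # Weight: evaluate the measurement log-likelihood for every particle.
    log_weights = [log_likelihood(x) for x in predicted]
    # Subtract the maximum before exponentiating to avoid underflow.
    largest = max(log_weights)
    weights = [math.exp(lw - largest) for lw in log_weights]
    total = sum(weights)
    weights = [w / total for w in weights]
    # Resample: concentrate particles on likely states.
    return systematic_resample(predicted, weights, rng)
```

In a depth-based tracker, `log_likelihood` would compare the measured depth image with the image rendered for each particle's pose. Because the likelihood is only evaluated and never inverted or linearised, it can contain nonlinear effects such as occlusion.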

Open Source Code

We released our methods as open source code on GitHub in the Bayesian object tracking project.

We provide an easy entry point on our getting-started page.

Data Sets

We also provide data sets that allow quantitative evaluation of alternative methods. They contain real depth images from RGB-D cameras and high-quality ground truth annotations collected with a VICON motion capture system.
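As an illustration of what such a quantitative evaluation could look like, the following Python sketch computes the translational and angular error between an estimated pose and a ground truth pose. The metric is an assumption made for this example and the data sets do not prescribe it. Poses are assumed to be given as a 3D position in metres and a quaternion, and the function name `pose_error` is hypothetical.

```python
import math


def pose_error(est_position, est_quaternion, gt_position, gt_quaternion):
    """Return (translation error in metres, rotation error in radians).

    Quaternions may be in any component order as long as both use the same
    one. They are normalised here, so they need not be exactly unit length.
    """
    translation_error = math.sqrt(
        sum((e - g) ** 2 for e, g in zip(est_position, gt_position))
    )

    est_norm = math.sqrt(sum(c * c for c in est_quaternion))
    gt_norm = math.sqrt(sum(c * c for c in gt_quaternion))
    dot = sum(e * g for e, g in zip(est_quaternion, gt_quaternion))
    # q and -q describe the same rotation, hence the absolute value.
    cosine = min(1.0, abs(dot) / (est_norm * gt_norm))
    rotation_error = 2.0 * math.acos(cosine)

    return translation_error, rotation_error
```

Averaging these two errors over all frames of a sequence gives a simple summary number per method. Reporting them separately for occluded and unoccluded frames would show how much a method degrades under occlusion.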

Robot Arm Tracking

The pictures below show three samples from the data set, which was recorded on our robot Apollo. The sequences cover slow to fast motion of both the robot arm and the camera, and range from no occlusion to very severe, long-term occlusion.

![]()

To download the data set and for further details, see the GitHub pages.

Object Tracking

The picture below shows every object contained in the data set.

![]()

To download the data set and for further details, see the GitHub pages.