The Autonomous Motion Department focuses its research on intelligent systems that can move, perceive, and learn from experience. We are interested in understanding how autonomous movement systems can bootstrap themselves into competent behavior by starting from a relatively simple set of algorithms and pre-structuring and then learning from interaction with the environment. Instructions from a teacher can add useful prior information to get started. Trial-and-error learning to improve movement and perceptual skills is another domain of our research. We investigate such perception-action-learning loops in both biological and robotic systems, which can range in scale from nanoscale systems (cells, nano-robots) to macroscale systems (humans and humanoid robots).

One part of our research is concerned with learning in neural networks, statistical learning, and machine learning, since the ability to learn and self-organize seems to be among the most important prerequisites for autonomous systems.

Another part of the research program focuses on how movement can be generated, in particular in human-like systems with bodies, limbs, and eyes. This research touches on control theory, nonlinear control, nonlinear dynamics, optimization theory, and reinforcement learning.

In a third research branch, we investigate perception, in particular 3D perception with visual, tactile, and acoustic senses. A special emphasis lies on understanding active perception processes, i.e., how action and perception can assist each other to achieve better performance and robustness.

A fourth component of our work is concerned with human performance: we measure people's movements in specially designed behavioral tasks and their brain activity with neuroimaging techniques. Such research connects closely to work in computational neuroscience for motor control, and it includes abstract functional models of how brains may organize sensorimotor coordination.

Finally, a large part of the research in the lab emphasizes studies with actual humanoid and biologically inspired robots. First, this work lets us test our learning and control theories on real physical systems in order to evaluate the robustness of our research results. Second, it poses the challenge of scaling our methods to complex robots: our most advanced robot requires the nonlinear control of over 50 physical degrees of freedom that need to be coordinated with visual, tactile, and acoustic perception. When attempting to synthesize behavior with such a machine, we can discover the shortcomings of state-of-the-art learning and control theories and address them in subsequent research. Lastly, we also use humanoid robots for direct comparisons in behavioral experiments in which the robot is treated like a regular human subject.

Please see below for more information on the current research areas and projects at the Autonomous Motion Department.

Learning Control

When robots and other autonomous systems are to be used in complex environments such as outdoors, we cannot foresee every possible situation that the robot needs to cope with. Thus, rigidly pre-programming robots is not a viable solution. Instead, the robot must be able to adapt and learn. The Autonomous Motion Department de...

Perception for Action

In this research area, we are interested in the question of how a robot can leverage the continuous stream of multi-modal sensor data to act robustly in an uncertain and dynamically changing world. At the Autonomous Motion Department, we see perceptuo-motor skills as units in which motor control and ...

- Autonomous Robotic Manipulation

- Modeling Top-Down Saliency for Visual Object Search

- Interactive Perception

- State Estimation and Sensor Fusion for the Control of Legged Robots

- Probabilistic Object and Manipulator Tracking

- Global Object Shape Reconstruction by Fusing Visual and Tactile Data

- Robot Arm Pose Estimation as a Learning Problem

- Learning to Grasp from Big Data

- Gaussian Filtering as Variational Inference

- Template-Based Learning of Model Free Grasping

- Associative Skill Memories

- Real-Time Perception meets Reactive Motion Generation

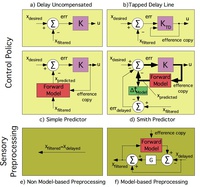

Motion Planning and Control

In this research area, we are focused on developing control and planning algorithms for the generation of movements in autonomous robots and especially in robots with hands and legs. We develop algorithms that allow machines to move and interact with their environment in a non-trivial manner. Robots should...

- Autonomous Robotic Manipulation

- Learning Coupling Terms of Movement Primitives

- State Estimation and Sensor Fusion for the Control of Legged Robots

- Inverse Optimal Control

- Motion Optimization

- Optimal Control for Legged Robots

- Movement Representation for Reactive Behavior

- Associative Skill Memories

- Real-Time Perception meets Reactive Motion Generation

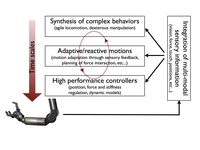

Neural Control of Movement

Given that humans are the living example that robust and intelligent perceptuo-motor behaviors can be realized at an amazing level of performance, investigating the principles of perceptuo-motor control in human and non-human primates has long been a compelling research topic. The Autonomous Motion ...

Experimental Robotics

In addition to fundamental theoretical research, the Autonomous Motion Department emphasizes experiments with actual systems, in particular anthropomorphic and humanoid robots. Two main platforms in use for research are Apollo, a dual-arm manipulation platform, and Athena, a comple...