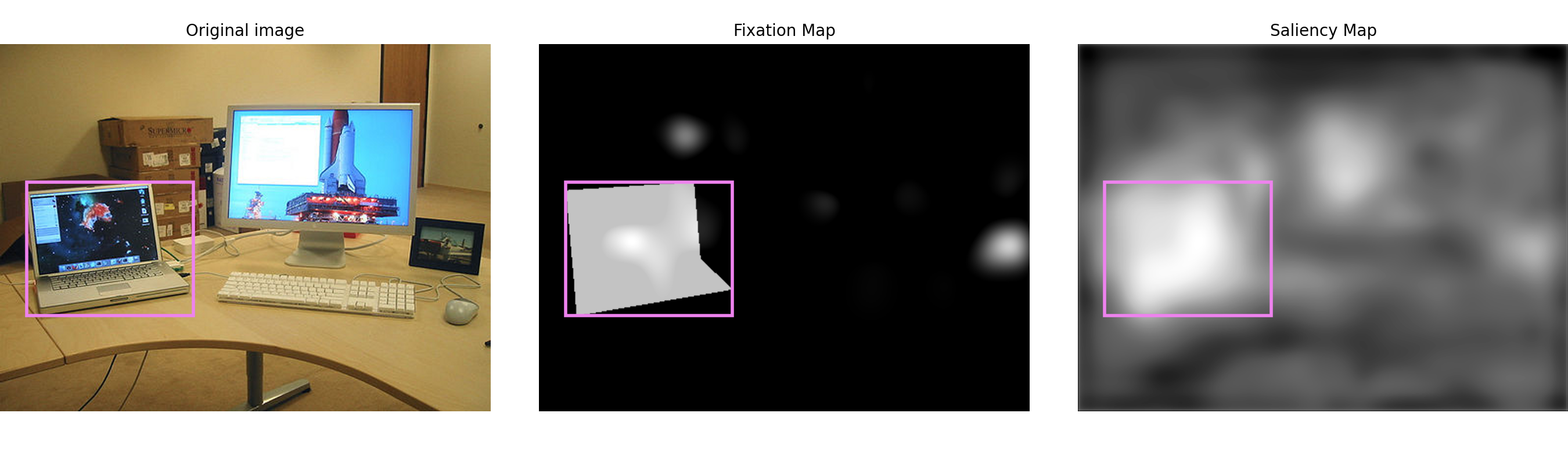

Searching for a given target object in a scene not only requires detecting the target object if it is visible, but also to identify promising locations for search if not. The quantity that measures how interesting an image region is given a certain task is called top-down saliency.

Saliency predictions are mostly used to reduce the computational burden of image processing: Instead of applying expensive processing steps like object classification exhaustively over the whole image, we can use saliency maps to chose a small set of salient regions for further processing and ignore other, uninteresting image parts

We investigate whether Convolutional Neural Networks can be trained on human search fixations to predict top-down saliency for search. To this end, we collected a dataset of fixations from 15 participants who searched for objects from three different categories. As the data also contains a lot of task-unrelated fixations, we developed different methods to facilitate the learning of task-specific behavior from it. We also evaluated the benefit of training on fixation data versus using the segmentation masks of target objects for training and showed that saliency predictions from fixation-trained models give better results for pruning region proposals.